We’d like to share a recent research project where our AgileX Robotics PiPER 6-DOF robotic arm was used to validate UniVLA, a novel cross-morphology policy learning framework developed by the University of Hong Kong and OpenDriveLab.

Paper: UniVLA: Learning to Act Anywhere with Task-centric Latent Actions

arXiv: [2505.06111] UniVLA: Learning to Act Anywhere with Task-centric Latent Actions

Code: GitHub - OpenDriveLab/UniVLA: [RSS 2025] Learning to Act Anywhere with Task-centric Latent Actions

Motivation

Transferring robot policies across platforms and environments is difficult due to:

- High dependence on manually annotated action data

- Poor generalization between different robot morphologies

- Visual noise (camera motion, background movement) causing instability

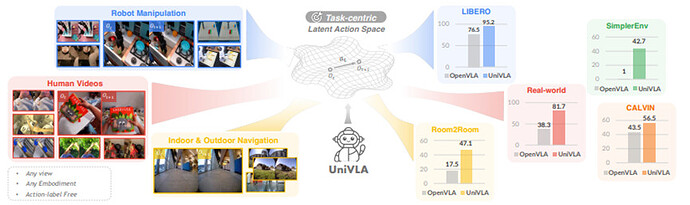

UniVLA addresses these challenges by learning latent action representations from videos, without relying on action labels.

Framework Overview

UniVLA introduces a task-centric, latent action space for general-purpose policy learning. Key features include:

- Cross-hardware and cross-environment transfer via a unified latent space

- Unsupervised pretraining from video data

- Lightweight decoder for efficient deployment

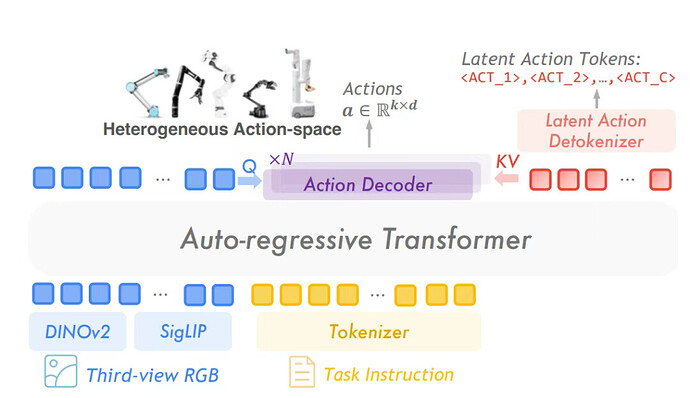

Figure 2: Overview of the UniVLA framework. Visual-language features from the third-view RGB image and the task instruction are tokenized and passed through an auto-regressive transformer, which generates latent actions that are decoded into executable actions across heterogeneous robot morphologies.
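To make the "shared latent actions, per-robot decoder" idea more concrete, here is a minimal toy sketch. It is not the UniVLA implementation: the codebook, the linear decoders, the robot names (`arm_6dof`, `mobile_base`), and all weights are hypothetical and chosen only for illustration. It assumes the policy has already produced a sequence of discrete latent-action tokens and shows how the same tokens could be decoded into action vectors of different sizes for different robots.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Latent = Tuple[float, float]

# Hypothetical codebook: each discrete latent-action token indexes a small,
# embodiment-agnostic embedding (2-D here purely for readability).
CODEBOOK: Dict[int, Latent] = {
    0: (0.0, 0.0),
    1: (1.0, 0.0),
    2: (0.0, 1.0),
    3: (-1.0, 0.0),
}


def make_linear_decoder(
    weights: Sequence[Sequence[float]],
) -> Callable[[Latent], List[float]]:
    """Return a decoder mapping a latent embedding to one robot's action vector."""

    def decode(latent: Latent) -> List[float]:
        # One output value per weight row: a plain dot product with the latent.
        return [sum(w * z for w, z in zip(row, latent)) for row in weights]

    return decode


# One lightweight decoder per embodiment; the output size differs per robot.
# Weights are illustrative constants, not learned parameters.
DECODERS: Dict[str, Callable[[Latent], List[float]]] = {
    # 6 joint deltas + 1 gripper command (assumed action layout)
    "arm_6dof": make_linear_decoder(
        [[0.1, 0.0], [0.0, 0.1], [0.05, 0.05],
         [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [1.0, 0.0]]
    ),
    # linear and angular velocity for a hypothetical mobile base
    "mobile_base": make_linear_decoder([[0.5, 0.0], [0.0, 0.5]]),
}


def decode_tokens(tokens: Sequence[int], robot: str) -> List[List[float]]:
    """Decode shared latent-action tokens into robot-specific actions."""
    decoder = DECODERS[robot]
    return [decoder(CODEBOOK[token]) for token in tokens]
```

With this toy setup, `decode_tokens([1, 2], "arm_6dof")` yields two 7-dimensional actions, while the same tokens passed with `"mobile_base"` yield 2-dimensional ones. Only the small decoder changes between robots; the token sequence stays the same.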

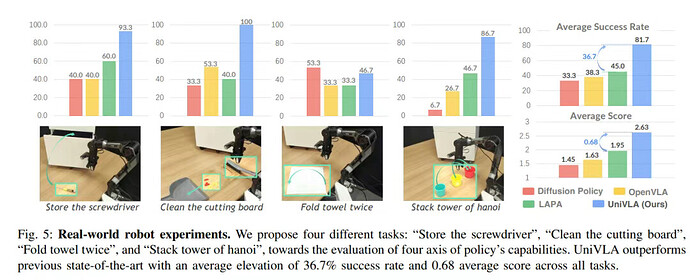

PiPER in Real-World Experiments

To validate UniVLA’s transferability, the researchers selected the AgileX PiPER robotic arm as the real-world testing platform.

Tasks tested:

- Store a screwdriver

- Clean a cutting board

- Fold a towel twice

- Stack the Tower of Hanoi

These tasks evaluate perception, tool use, non-rigid manipulation, and semantic understanding.

Experimental Results

- Average performance improved by 36.7% over baseline models

- Up to 86.7% success rate on semantic tasks (e.g., Tower of Hanoi)

- Fine-tuned with only 20–80 demonstrations per task

- Evaluated using a step-by-step scoring system
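For readers unfamiliar with step-wise scoring, the sketch below shows one common way such a metric can be computed: each trial earns partial credit for the ordered sub-steps it completes, and a trial counts as a full success only when every sub-step is done. The post does not specify the exact rubric used in the paper, so the `Trial` structure, the function names, and the example numbers are all assumptions for illustration and are not results from the experiments.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Trial:
    """One evaluation rollout: how many ordered sub-steps were completed."""

    steps_completed: int
    steps_total: int


def step_score(trial: Trial) -> float:
    """Partial credit for a trial: the fraction of sub-steps completed."""
    if trial.steps_total <= 0:
        raise ValueError("steps_total must be positive")
    if not 0 <= trial.steps_completed <= trial.steps_total:
        raise ValueError("steps_completed must lie in [0, steps_total]")
    return trial.steps_completed / trial.steps_total


def summarize(trials: Sequence[Trial]) -> Tuple[float, float]:
    """Return (mean step score, full-success rate) over a set of trials."""
    if not trials:
        raise ValueError("at least one trial is required")
    mean_score = sum(step_score(t) for t in trials) / len(trials)
    full_successes = sum(t.steps_completed == t.steps_total for t in trials)
    return mean_score, full_successes / len(trials)


# Made-up trials for a two-step task such as "fold a towel twice".
example_trials = [Trial(2, 2), Trial(1, 2), Trial(0, 2), Trial(2, 2)]
mean_score, success_rate = summarize(example_trials)
```

Reporting both numbers is useful because the mean step score rewards partial progress on long-horizon tasks, while the success rate only counts trials that finish every step.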

About PiPER

PiPER is a 6-DOF lightweight robotic arm developed by AgileX Robotics. Its compact structure, ROS support, and flexible integration make it ideal for research in manipulation, teleoperation, and multimodal learning.

Learn more: PiPER

Company website: https://global.agilex.ai

Click the link below to watch the experiment video using PiPER:

Collaborate with Us

At AgileX Robotics, we work closely with universities and labs to support cutting-edge research. If you’re building on topics like transferable policies, manipulation learning, or vision-language robotics, we’re open to collaborations.

Let’s advance embodied intelligence—together.